Welcome to Baemax

Baemax is my personal sandbox for applied systems thinking —

a place to explore how structure, incentives, and information flow shape outcomes

across finance, technology, and beyond.

Built during weekends and holidays, it reflects a curiosity-driven approach

grounded in hands-on experimentation rather than abstraction alone.

Many of the projects here originate from my professional focus on

trade execution, data infrastructure, and risk-aware system design.

Tools such as Che’s Reporting System (CRS), Pulse,

and Sentinel explore how complex data can be transformed into

interpretable signals and operationally robust workflows.

More recently, I’ve extended this work into AI-assisted and automated systems,

experimenting with how large language models and orchestration tools

can reduce friction in decision-heavy workflows.

The AI Job Search & Screening Engine is one such experiment: an exploration of matching, explainability, and efficiency under uncertainty.

Alongside software, Baemax also hosts work that steps outside finance —

including patents and physical system integrations —

used as stress-tests of the same principles applied in different environments.

Baemax is not a product catalogue or a consultancy.

It’s a working notebook — a place to challenge assumptions,

build prototypes, and refine perspective through iteration.

Projects

The projects below are selected examples of how I apply a systems-oriented approach

across software, data, automation, and physical integration.

Built and tested in a personal home lab, they serve as practical experiments

in structure, observability, and controlled iteration.

Financial Systems & Trading Infrastructure

- Pulse API: a FastAPI-based trading platform designed for trade booking, position management, and real-time monitoring, with an emphasis on correctness, traceability, and operational robustness.

- Sentinel: a monitoring and control layer built on top of Pulse, focused on managing entry and exit behaviour for systematic strategies and reducing execution and operational risk.

- Che’s Reporting System (CRS): a reporting and analytics framework that transforms raw market and execution data into interpretable signals for performance analysis and decision review.

- AI-Powered Assistant (Experimental): a Telegram-based assistant exploring how large language models can summarise information flows and surface market context. This project is explicitly exploratory and not used for decision-making, serving instead as a testbed for prompt design and human–AI interaction.

Market Data & Signal Infrastructure

- Stock Picker: a framework for evaluating equities against systematic selection criteria, designed to test repeatability rather than intuition.

- Market Data Pipelines: tools for ingesting, storing, and publishing market and index data, enabling reproducible historical analysis and low-latency downstream use.

Automation, Messaging & Control

- Signal Messenger: a real-time messaging system built on Redis Streams, translating system events into human-readable alerts with explicit delivery guarantees.

- Authentication & Access Control: shared services providing user management and permissions across internal tools, with an emphasis on simplicity and auditability.

- Cache & Performance Layers: experiments in reducing system load and latency through targeted caching, reinforcing the principle that performance is a full-stack concern.

AI Job Search & Screening Engine

An applied experiment in matching under uncertainty.

This system compares CVs against live job postings — or reverses the process for HR teams —

to explore how explainable AI can reduce manual screening effort

while preserving transparency and consistency.

- Two-way matching with interpretable reasoning

- Automated data collection and orchestration

- End-to-end pipeline using Docker, MongoDB, and Streamlit

- Notification-driven workflow for high-confidence matches

View AI Job Search Engine

🔒 Password protected — request a demo.

Physical Systems & Integration

Some projects deliberately step outside software,

serving as physical stress-tests of the same systems principles

applied throughout my work.

- Audi A6 C7 (Integrated Systems Retrofit): a full dashboard deconstruction and integration of modern Android Auto functionality into a legacy Audi platform, with the goal of achieving a factory-standard user experience. The project emphasised traceability, reversibility, and documentation, treating physical modification with the same discipline as production system changes.

  ▶ View full walkthrough

Infrastructure & Home Lab

- Home Lab: a multi-node Linux environment with RAID-backed storage, containerised services, and secure remote access, used to test architectures before they are ever deployed elsewhere.

- Network Micro-Optimisation: experiments in routing, QoS, and switching behaviour, reinforcing the idea that performance emerges from interactions across network, operating system, and application layers.

- Home Automation: applied control systems integrating energy monitoring, lighting, and security into a coherent, observable whole.

Across all of these projects, the goal is the same:

to understand how systems behave when pushed beyond idealised assumptions,

and to design structures that remain robust, observable,

and recoverable under real-world constraints.

System

CRS

Che's Reporting System (CRS) is a tailored reporting platform built for data visualisation and analytics. CRS transforms raw data into actionable insights, supporting business intelligence, personal research, and trend analysis. Its intuitive design simplifies complex data interpretation, making insights accessible to both technical and non-technical users.

Note: Some content is password-protected for secure access.

Docker

The Baemax Docker Dashboard is a central hub for managing and monitoring containerised applications and services. It provides streamlined control over projects, enhancing efficiency and scalability.

Note: Multi-factor authentication (MFA) is required to ensure secure access.

Patents

Baemax Useful Compute

A decentralised platform that matches idle computing power with scientific and AI projects.

Focuses on sustainability, fair rewards, and real-world useful compute.

Status: Patent Pending (GB2506299.3, Filed 27 April 2025)

Learn More

Baemax Inlet Shield

A modular, easy-fit shield that temporarily reduces engine air intake diameter during high-risk phases

(e.g. takeoff and landing), significantly lowering the probability of dual engine failure from bird or debris ingestion —

thereby helping preserve the redundancy assumptions built into twin-engine aircraft design.

Status: Patent Pending (GB2509315.4, Filed 12 June 2025)

Learn More

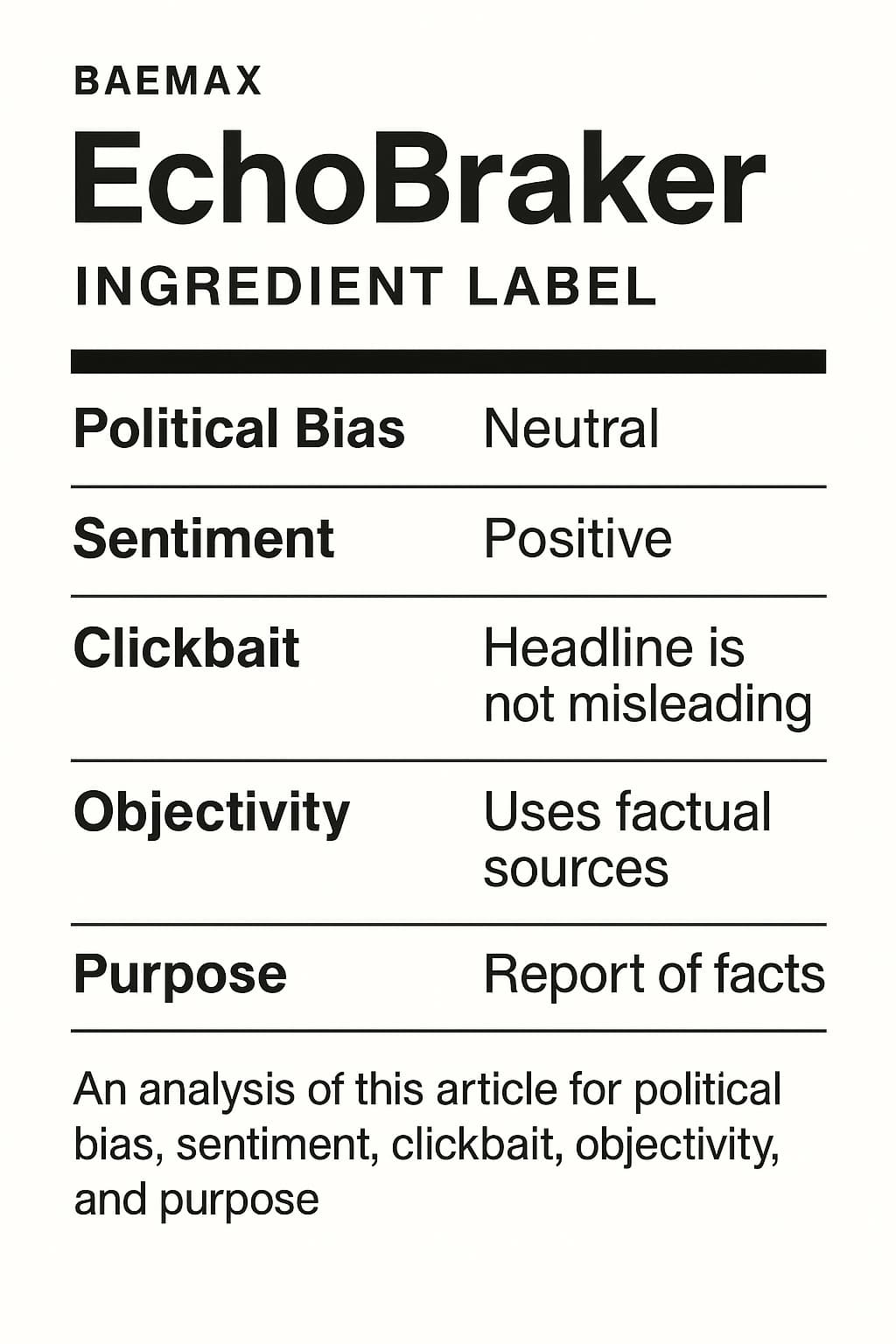

Baemax EchoBreaker

A content transparency framework that evaluates media for bias, sentiment, and factuality — empowering readers, advertisers, and regulators alike.

Provides unbiased clarity, not censorship. Enables selective ad placement and supports a potential framework for digital excise duty.

Status: Patent Pending (GB2509923.5, Filed 20 June 2025)

Learn More

Baemax Useful Compute

Problem: The World Wastes Compute While Science Needs It

Every day, billions of CPUs and GPUs sit idle in homes, offices, and data centres — while scientific research,

biotech simulations, and AI development face soaring compute costs and limited GPU access. At the same time,

crypto mining burns vast amounts of electricity to perform computation with no real-world value.

There is no global system that rewards people for contributing useful computing power to

meaningful scientific and AI tasks. The world has abundant idle hardware — but no marketplace to activate it.

Our vision: To transform the world’s unused computing power into a fair, secure,

and sustainable engine for scientific, medical, and AI breakthroughs.

Patent Pending (UKIPO Filing No: GB2506299.3, Filed 27 April 2025)

The Solution: Baemax Useful Compute

Baemax Useful Compute is a decentralised, incentive-based platform that matches underutilised CPU/GPU resources

with organisations needing affordable compute for AI training, drug discovery, and scientific research.

The system introduces a dynamic voluntary matching mechanism that rewards contributors based on quality, availability,

and reliability — with an optional project-based upside model. It brings together decentralisation and

real-world useful compute to create a fairer, greener, and more accessible global compute ecosystem.

This invention is protected under a provisional patent application filed with the UK Intellectual Property Office,

with international coverage secured under the Paris Convention. A global PCT filing is planned.

By extending the lifecycle of existing hardware and reducing dependence on energy-heavy data centre expansion,

Baemax Useful Compute contributes positively to environmental sustainability.

Why This Innovation Matters

Baemax Useful Compute uniquely combines voluntary decentralised matching, contributor-quality pricing,

and project-based upside into a single platform. Unlike cloud rental or crypto mining,

Baemax focuses entirely on real-world useful computation.

It unlocks global latent compute, rewards real contribution, and empowers scientific and AI progress —

all while offering a greener and more equitable alternative.

How Baemax Useful Compute is Different

| Feature | Traditional Cloud (AWS etc.) | Crypto Mining | Baemax Useful Compute |
| --- | --- | --- | --- |
| Type of Work | Centralised compute rental | Hash solving (no real-world value) | Real-world useful scientific/AI compute |
| Ownership | Company owns everything | Decentralised, but limited usefulness | Decentralised, real useful work |
| Incentives | Paid by usage | Paid for mining coins | Paid based on useful contribution, plus optional project upside |
| Matching | Centralised assignment | None (just mining) | Voluntary, decentralised matching |
| Environmental Impact | High (new hardware, energy waste) | High (energy-intensive) | Lower (use existing hardware, real-world benefit) |
| Flexibility | Expensive and rigid | Very narrow (crypto only) | Flexible: wide range of industries |
| Accessibility | Big companies only | Anyone with GPU/ASICs | Anyone with CPU/GPU |

Frequently Asked Questions

Isn’t this similar to GPU rental marketplaces like Vast.ai?

Platforms such as Vast.ai validate that decentralised compute can work at scale.

However, they operate primarily as fixed-rate GPU rental marketplaces where providers

list hardware at static prices.

Baemax Useful Compute is fundamentally different in how value is discovered.

It introduces voluntary decentralised matching with a dynamic, market-driven

incentive mechanism, where rewards adjust continuously based on supply,

demand, and contribution quality. Rather than renting compute, Baemax creates a

self-balancing market for useful computation.

Who owns the data, models, and outputs?

Baemax Useful Compute never takes ownership of data, models, or results.

All intellectual property and data remain 100% with the requester, similar to

traditional cloud or HPC environments. Baemax acts solely as a compute coordination

and incentive layer.

Can compute contributors see or access sensitive data?

No. Workloads are designed to run in sandboxed environments (containers or virtual

machines), with encrypted data in transit and at rest. Optional confidential compute

mechanisms can be used so contributors execute workloads without access to raw data.

Rewards are based on verifiable execution and performance, not data visibility.

How is compute quality measured and rewarded?

Contributors are rewarded based on objective performance signals such as reliability,

availability, task completion, and reproducibility. Higher-quality and more reliable

contributions are rewarded automatically by the system through dynamic pricing and

incentive adjustment.
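As a rough illustration of how such signals could feed a reward, the sketch below combines the four signals named above into one score and scales a base rate by it and by a demand/supply ratio. The equal weights, the multiplicative form, and every name here (`ContributorSignals`, `quality_score`, `reward_rate`) are assumptions made for illustration, not the platform's actual pricing mechanism.

```python
from dataclasses import dataclass, astuple


@dataclass(frozen=True)
class ContributorSignals:
    """Performance signals named in the answer above, each scaled to 0..1."""
    reliability: float
    availability: float
    task_completion: float
    reproducibility: float


# Hypothetical equal weights; the source does not say how signals are combined.
WEIGHTS = (0.25, 0.25, 0.25, 0.25)


def quality_score(signals: ContributorSignals) -> float:
    """Weighted average of the four signals, in the range 0..1."""
    return sum(w * v for w, v in zip(WEIGHTS, astuple(signals)))


def reward_rate(base_rate: float, signals: ContributorSignals,
                demand: float, supply: float) -> float:
    """Scale a base rate by quality and by a demand/supply ratio (assumed form)."""
    if supply <= 0:
        raise ValueError("supply must be positive")
    return base_rate * quality_score(signals) * (demand / supply)


steady = ContributorSignals(0.99, 0.95, 0.98, 1.00)
flaky = ContributorSignals(0.70, 0.40, 0.75, 0.90)
print(reward_rate(1.0, steady, demand=120, supply=100))
print(reward_rate(1.0, flaky, demand=120, supply=100))
```

Under these assumptions the steadier contributor earns the higher rate for the same market conditions, which is the behaviour the answer describes.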

Is this a crypto or token-mining platform?

No. Baemax Useful Compute focuses exclusively on real-world useful computation

such as AI training, scientific simulations, and medical research. It does not incentivise

wasteful hash-based computation or speculative mining.

What are the environmental benefits?

By activating existing, underutilised hardware and distributing workloads across

available capacity, Baemax reduces the need for new energy-intensive data centre

expansion. This efficiency is a natural consequence of decentralised matching,

rather than a separate sustainability programme.

For collaboration, partnership, or licensing enquiries, please see the Contact section.

Quick Explanation: Think of Baemax Useful Compute as staking your computer’s power — earning rewards by contributing real computing work for AI, biotech, and science projects.

Another Simple Explanation: Think of Baemax Useful Compute as Tinder for computing power — workers and requesters voluntarily match based on project needs and quality, creating a fair, decentralised marketplace for useful compute. Even that old computer collecting dust on the shelf could find new purpose helping train AI, discover new medicines, or solve climate challenges.

🔧 Reference Implementation: An exploratory, non-production codebase is available on GitLab. It serves as a thinking aid and reference for the proposed architecture, matching concepts, and incentive mechanisms, rather than a functional product.

Download the full paper:

From Idle Compute to Useful Compute (PDF)

Baemax Inlet Shield began as an examination of a hidden assumption rather than a mechanical design.

Modern twin-engine aircraft certification rests on the idea that engine failures are independent events.

Using a simple probabilistic framework, this work shows how that assumption degrades as engine inlet diameters scale —

not because engines are less reliable, but because both are increasingly exposed to the same airspace at the same moment.

The result is a non-linear rise in dual-engine ingestion risk that existing, diameter-agnostic standards do not explicitly address.

The Inlet Shield concept emerges as one possible response, but the core contribution is identifying where independence

quietly turns into correlation.

Perspective

This work is informed by experience outside aerospace.

In electronic trading systems, redundancy only works if failures remain independent.

When two systems are exposed to the same inputs, data sources, or failure modes,

apparent redundancy can quietly collapse into correlated risk.

That lens prompted the question explored here:

as aircraft engines grow larger and more symmetric,

do we still preserve the independence that twin-engine certification assumes —

or have physical scaling effects begun to erode it?

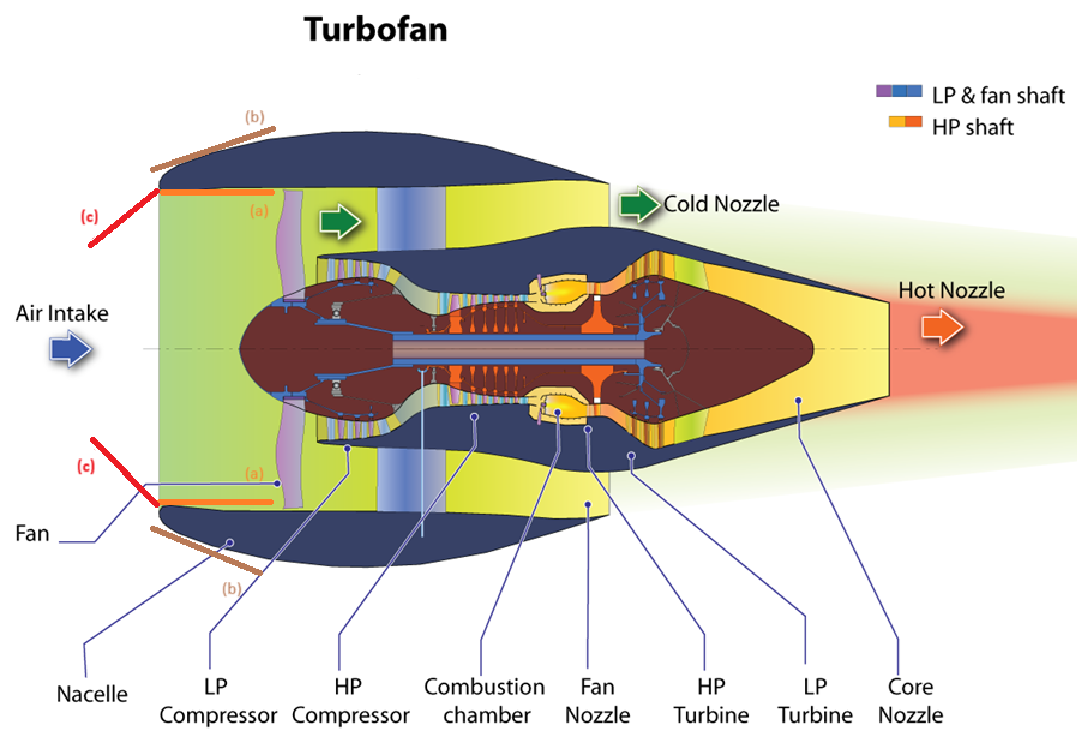

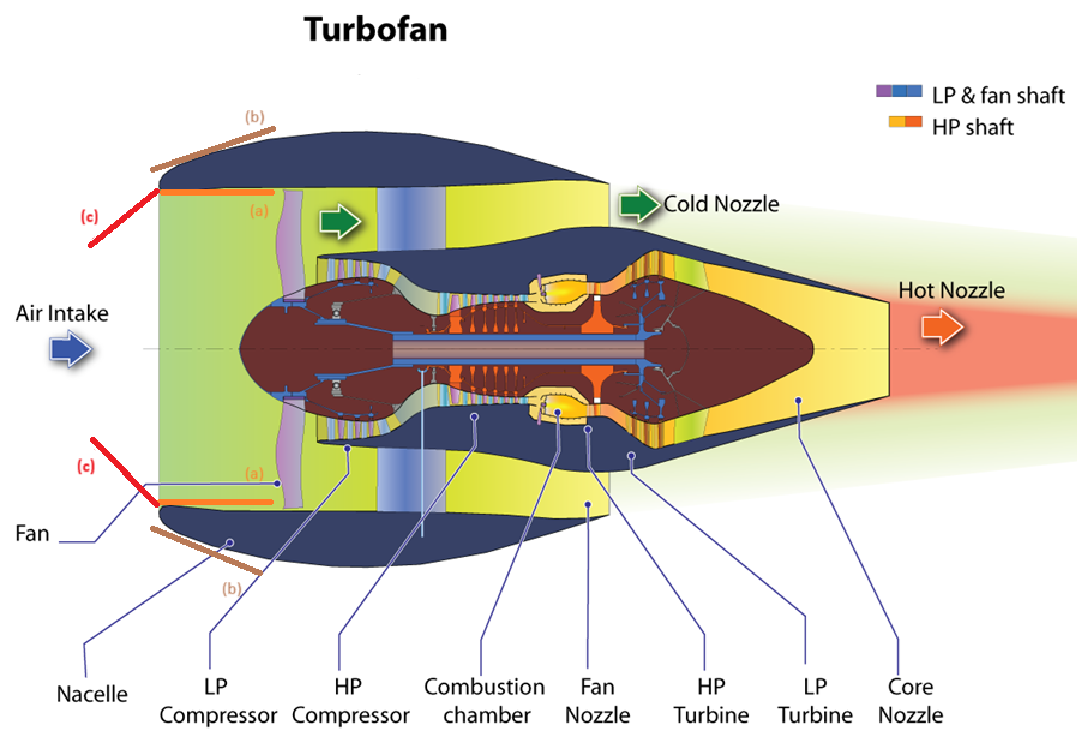

Problem: Engines Keep Getting Bigger — But Safety Assumptions Haven’t Scaled

Modern turbofan engines have tripled in diameter compared to those in service when bird-ingestion certification

standards were established. Inlet frontal area has increased more than sevenfold, materially increasing exposure

to birds and foreign objects during the most vulnerable phases of flight.

Certification frameworks still focus primarily on single-object ingestion,

relying on the long-standing assumption that engine failures are independent.

However, large-diameter inlets positioned symmetrically on modern aircraft increasingly expose

both engines to the same airspace at the same moment, creating correlated ingestion risk.

This represents a systematic redundancy compromise rather than a component-level failure.

🔍 See how correlated risk is quantified

🔍 Interactive simulation — explore dual-engine ingestion probability

The concept above illustrates a deployable inlet modulation mechanism that reduces effective inlet area

during low-altitude, high-risk phases of flight.

Rather than attempting to eliminate ingestion risk entirely, the approach accepts a

controlled, temporary reduction in airflow margin

in exchange for a disproportionate reduction in correlated dual-engine ingestion probability.

This trade-off aligns with existing certification philosophy, where aircraft are designed

to remain controllable and survivable under reduced performance on a single engine.

Patent Pending (UKIPO Filing No: GB2509315.4, Filed 12 June 2025)

The Concept: Baemax Inlet Shield

The Baemax Inlet Shield is a modular, retractable system intended to temporarily reduce exposed inlet area

during the short but highest-risk phases of flight, such as takeoff, landing, and low-altitude operation.

Once the aircraft exits bird-dense airspace, the system retracts to restore nominal inlet geometry.

Design Principles

- ⚙️ Modular & Retrofit-Oriented — compatible with existing turbofan architectures

- 🕒 Phase-Limited Deployment — active only during minutes of highest ingestion risk

- 🔒 Fail-Safe Bias — defaults to full-open in the event of malfunction

- 🛠️ Lower-Sector Protection — addresses runway debris and flock-level ingestion vectors

- 🌀 System-Level Risk Reduction — targets correlated failures rather than single-engine events

Why It Matters

Bird strikes remain a leading cause of engine-related incidents, with the majority occurring below

3,000 ft during takeoff and landing. As inlet size increases, the probability of simultaneous

exposure rises faster than linearly.

This work reframes the safety question: rather than asking whether an individual engine can withstand ingestion,

it asks how aircraft-level survivability changes when redundancy assumptions no longer hold.

The Inlet Shield concept represents one possible mitigation within that broader systems analysis.

🔗 FAA Wildlife Strike Database (reference)

Intuition: Similar to squinting in bright light, the system temporarily narrows exposure

during moments of heightened risk — accepting reduced performance margin to protect against more severe damage.

For professional enquiries, please see the Contact section.

Download the full paper:

Correlated Risk in Large Turbofan Architectures (PDF)

📊 Poisson Risk Model: Bird Ingestion Probability

The probability of a jet engine ingesting k birds during a bird flock encounter can be modeled using the

Poisson distribution:

$$P(k) = \frac{\lambda^{k}\, e^{-\lambda}}{k!}$$

Where:

- P(k): Probability of exactly k birds being ingested

- λ (lambda): Expected number of birds in the inlet area

- e: Euler’s number (~2.718)

- k!: Factorial of k

The expected number of birds (λ) is calculated as:

λ = bird density × inlet area
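A minimal Python sketch of the two formulas above, assuming the inlet can be treated as a circle of the stated diameter (an assumption, though it matches the areas quoted in the example on this page). The function names `poisson_pmf` and `expected_birds` are illustrative.

```python
import math


def poisson_pmf(k: int, lam: float) -> float:
    """Probability of exactly k ingested birds when lam birds are expected."""
    return (lam ** k) * math.exp(-lam) / math.factorial(k)


def expected_birds(density_per_m2: float, inlet_diameter_m: float) -> float:
    """lambda = bird density x inlet area, with the inlet modelled as a circle."""
    area = math.pi * (inlet_diameter_m / 2) ** 2
    return density_per_m2 * area


# Example inputs: 0.1 birds/m^2 and a ~1.1 m inlet.
lam = expected_birds(0.1, 1.1)
for k in range(3):
    print(f"P({k}) = {poisson_pmf(k, lam):.4f}")
```

The probability of ingesting at least one bird then follows as 1 − P(0) = 1 − e^(−λ).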

🔍 Explore Interactive Risk Simulation

🛠️ Example Comparison

Assume a typical bird flock density of 0.1 birds/m².

| Engine | Inlet Diameter | Inlet Area (m²) | λ (Expected birds) | P(≥1 Bird) | P(Both Ingest) (independence assumed) |
| --- | --- | --- | --- | --- | --- |
| JT8D | ~1.1 m | ~0.95 | 0.095 | ~9.1% | ~0.8% |
| GE9X | ~3.4 m | ~9.07 | 0.907 | ~59.7% | ~35.6% |

Model note:

This simplified model does not attempt to capture flock geometry or spatial correlation explicitly;

instead, it illustrates how scaling inlet area alone is sufficient to stress independence assumptions.

🔍 Important Context: The probabilities shown above are conditional on a bird flock encounter.

In other words, they assume the aircraft is already flying through a bird flock with a density of 0.1 birds/m².

While such encounters are rare, the table quantifies risk once the encounter occurs.

The chance of both engines ingesting a bird is the square of the single-engine probability P(≥1 Bird), assuming independence:

$$P(\text{both}) = P(\text{one})^{2}$$

This illustrates how the redundancy assumption (that one engine will survive) becomes weaker with larger inlets, as the chance of both engines ingesting birds rises nonlinearly.
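The example comparison can be recomputed in a few lines. This sketch assumes circular inlets and uses P(≥1) = 1 − e^(−λ), the complement of the Poisson zero-bird case. The helper name `ingestion_risk` is illustrative, and the results should agree with the example figures to within rounding.

```python
import math

BIRD_DENSITY = 0.1  # birds per m^2, the flock density assumed in the example

# Approximate inlet diameters in metres, as quoted in the example comparison.
ENGINES = {"JT8D": 1.1, "GE9X": 3.4}


def ingestion_risk(diameter_m: float, density: float = BIRD_DENSITY):
    """Return (area, lambda, P(>=1 bird), P(both engines)) under independence."""
    area = math.pi * (diameter_m / 2) ** 2
    lam = density * area
    p_one = 1.0 - math.exp(-lam)  # complement of the Poisson k = 0 case
    p_both = p_one ** 2           # valid only if the two engines are independent
    return area, lam, p_one, p_both


for name, diameter in ENGINES.items():
    area, lam, p_one, p_both = ingestion_risk(diameter)
    print(f"{name}: area={area:.2f} m^2, lambda={lam:.3f}, "
          f"P(>=1)={p_one:.1%}, P(both)={p_both:.1%}")
```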

Takeaway:

Larger engines increase the probability of dual ingestion events significantly. With modern wide-body engines like the GE9X, the probability of both engines ingesting birds rises to roughly 36% in the dense-flock scenario above, compared with under 1% for smaller engines like the JT8D. Certification frameworks may not fully account for this compounding risk when independence assumptions no longer hold.

⚠️ Rethinking Redundancy: Conditional Risk

The assumption that engine failures are independent leads to a joint probability of:

$$P(\text{both}) = P(\text{one})^{2} = 0.597^{2} \approx 35.6\%$$

However, in reality, both engines fly through the same airspace at the same time, especially during critical phases like takeoff and landing.

If one engine ingests a bird, the conditional probability that the other engine also ingests a bird increases substantially.

P(engine 2 | engine 1 ingests) > P(engine 2)

For large-diameter engines (e.g., GE9X), both engines encounter the same bird density at the same time.

Thus, assuming independent failures may underestimate the true joint risk. Simulations and bird flock geometries suggest the

conditional dependence between engines could elevate the chance of dual ingestion compared to the independent case.
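One simple way to see the effect, offered as an illustrative sketch and not as the simulation referred to above: let both engines share the same uncertain local bird density. Given that density they ingest independently, but because the density is common to both, the joint probability exceeds the square of the single-engine probability. The two-scenario density split below is hypothetical, chosen only so that its mean matches the 0.1 birds/m² used earlier. The function names are also assumptions.

```python
import math


def p_ingest(density: float, area: float) -> float:
    """P(>=1 bird) for one engine at a given local bird density."""
    return 1.0 - math.exp(-density * area)


def joint_risk(scenarios, area: float):
    """scenarios: list of (probability, local_density) shared by both engines.

    Engines are independent *given* the density, but share that density.
    """
    p_one = sum(w * p_ingest(rho, area) for w, rho in scenarios)
    p_both = sum(w * p_ingest(rho, area) ** 2 for w, rho in scenarios)
    return p_one, p_both


GE9X_AREA = math.pi * (3.4 / 2) ** 2       # ~3.4 m inlet treated as a circle
scenarios = [(0.5, 0.05), (0.5, 0.15)]     # hypothetical split, mean 0.1 birds/m^2

p_one, p_both = joint_risk(scenarios, GE9X_AREA)
p_second_given_first = p_both / p_one

print(f"P(one)                 = {p_one:.3f}")
print(f"P(one)^2 (independent) = {p_one ** 2:.3f}")
print(f"P(both) (shared flock) = {p_both:.3f}")
print(f"P(engine 2 | engine 1) = {p_second_given_first:.3f}")
```

With a single fixed density the two figures coincide, so any gap between P(both) and P(one)² in this sketch comes entirely from the shared exposure.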

🚨 Critical Thought:

When failure of a primary system materially increases the conditional probability

of failure in its backup, the system no longer behaves as a truly redundant design

under real operating conditions.

This raises questions about certification assumptions that rely on independence,

particularly for ultra-large engine architectures operating in bird-dense environments.

💡 One possible system-level response to correlated ingestion risk

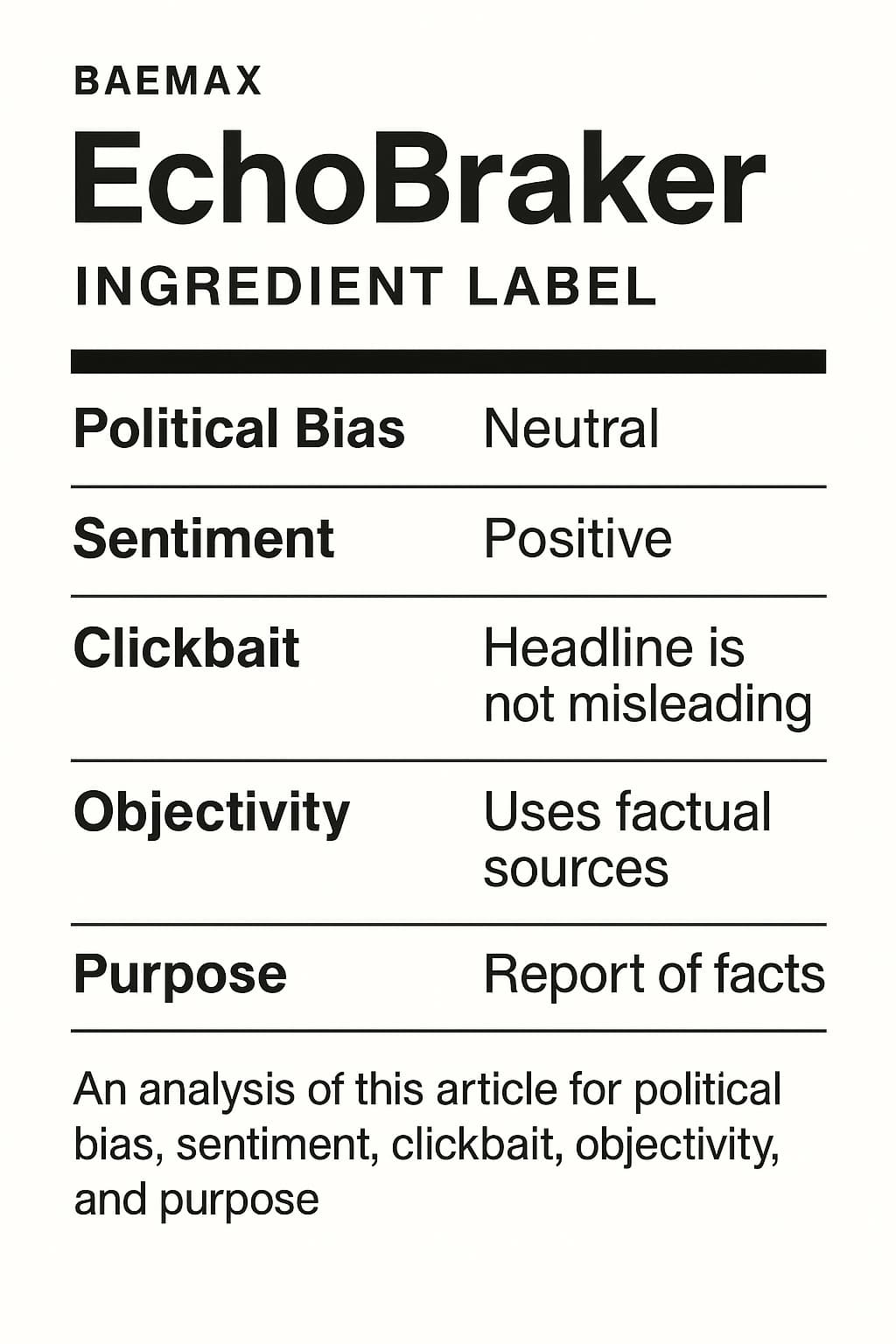

Baemax EchoBreaker

Problem: Information Has No Nutritional Label

In a world flooded with online content, people consume biased, emotionally charged, or manipulative information

without any transparency into how it might influence them. Governments face a false dilemma between censorship

and chaos — with no middle ground that preserves open speech while providing clarity.

Unlike food, media today comes with no label showing emotional tone, bias, or ideological lean.

This leaves readers vulnerable to echo chambers, confirmation bias, and polarisation.

The Solution: Baemax EchoBreaker

EchoBreaker provides transparency — not censorship. It acts as a “nutritional label”

for content, revealing bias markers, emotional tone, sentiment, and ideological

indicators across multiple analytical models rather than asserting a single viewpoint.

It slows the momentum of unchecked bias — not by blocking content, but by giving readers the awareness to

interpret it thoughtfully.

“Truth doesn’t fear scrutiny — it evolves through it. Throughout history, dissenting voices often became

tomorrow’s innovators.”

EchoBreaker empowers open dialogue by providing context, not restriction. It protects freedom of expression

while helping readers navigate an overwhelming information landscape.

Design Principle: Diagnosis, Not Judgment

EchoBreaker is not designed to decide what is true, acceptable, or correct.

It is a diagnostic system that reveals how content is framed across different

analytical lenses.

Crucially, EchoBreaker does not rely on a single AI model. It compares outputs

across multiple models, surfaces disagreement, and reports distributions rather

than definitive verdicts. Agreement is not treated as truth — variance is treated

as signal.

Neutrality is enforced through plurality, model rotation, and auditability.

Any system that produces a single, unchallengeable label would simply recreate

the very concentration and convergence risks EchoBreaker is designed to expose.

Origins: From Trading Floors to Echo Chambers

The idea for EchoBreaker emerged from years of trading experience. In financial markets, I often noticed how easily conviction can be reinforced by selective exposure — reading only research that supports a position, ignoring dissenting views, and mistaking consensus for truth.

This tendency, known as confirmation bias, doesn’t just influence trades — it mirrors how we consume information more broadly. Whether in markets or media, we gravitate toward narratives that affirm our beliefs and avoid those that challenge them.

EchoBreaker began as a tool I wish I had — something to help identify when my information diet is becoming one-sided. It’s a bridge between trading psychology and civic clarity, helping anyone pause, reflect, and see more clearly before acting on conviction.

Why This Matters

Just as nutritional labels transformed consumer health, transparency in media consumption can transform

civic and mental health. A well-informed mind is a well-nourished mind.

Businesses can also use EchoBreaker to place ads more responsibly, and regulators can use it to guide age

warnings or content taxes without censoring speech.

EchoBreaker is not a claim that humans are uniquely irrational, nor that technology

can eliminate bias. Humans have always sought confirmation. What has changed is

the scale, speed, and persistence with which algorithmic systems reinforce and

stabilise those tendencies.

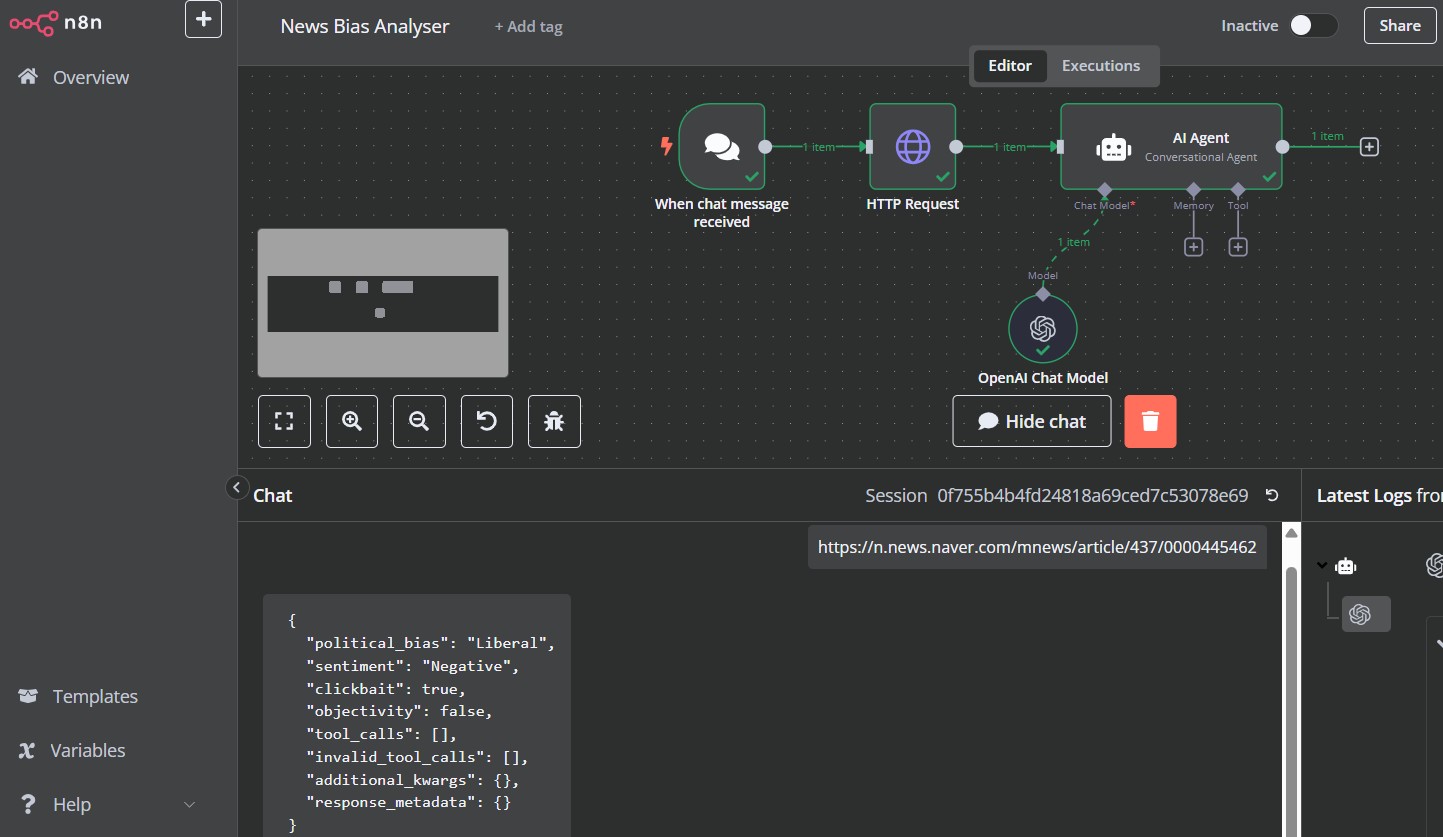

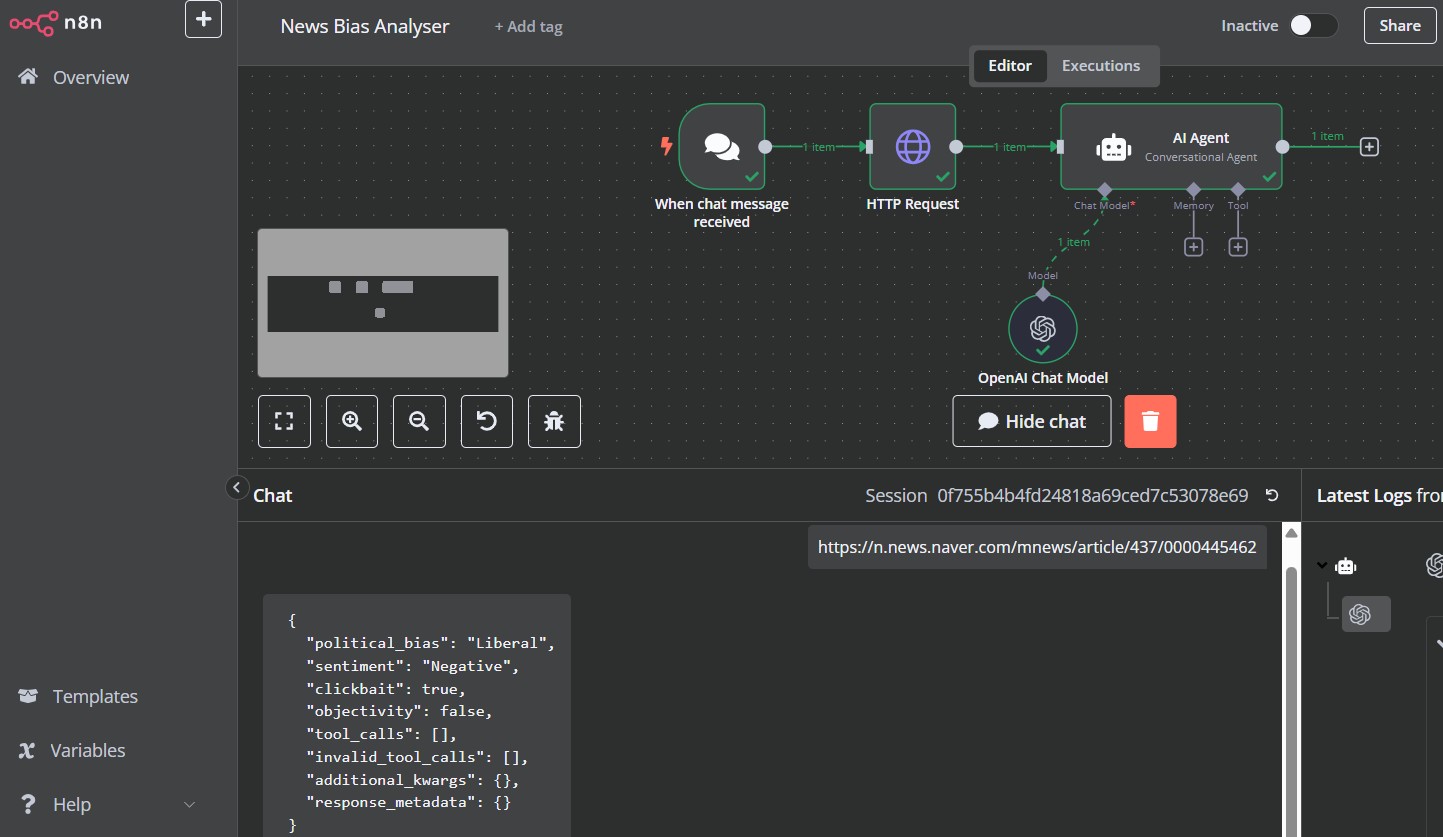

Prototype

A working implementation built with n8n and an LLM can analyse any article URL and return a JSON report of bias markers, sentiment, and ideological leanings. Available on request.

🔍 Example Output from EchoBreaker

This is a typical JSON response from the EchoBreaker model when analyzing a news article:

Current PoC Output (single-model):

```json
{
  "Political Bias": "Liberal",
  "Sentiment": "Negative",
  "Clickbait": "Yes, the headline appears to be misleading.",
  "Objectivity": "Partially objective, but includes subjective language.",
  "tool_calls": [],
  "invalid_tool_calls": [],
  "additional_kwargs": {},
  "response_metadata": {}
}
```

Planned Extension: Multi-Model Analysis

The current proof-of-concept uses a single LLM to demonstrate feasibility.

The intended design of EchoBreaker extends this to a multi-model architecture,

where the same content is analysed independently by multiple AI models.

Rather than producing a single authoritative label, EchoBreaker will surface

disagreement, distribution, and variance across models. Neutrality is treated

as an operational constraint enforced through plurality, not an assumed property

of any single model.

Illustrative multi-model output:

```json
{
  "Political Bias": {
    "model_A": "Liberal",
    "model_B": "Neutral",
    "model_C": "Conservative"
  },
  "Sentiment": {
    "model_A": "Negative",
    "model_B": "Neutral",
    "model_C": "Negative"
  },
  "Median Bias": "Neutral",
  "Variance": "High"
}
```

High variance is treated as a warning signal rather than an error condition.
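A minimal sketch of how the "Median Bias" and "Variance" fields might be derived from per-model labels. The ordinal scale (Liberal = -1, Neutral = 0, Conservative = 1), the 0.5 variance threshold, and the function name `summarise_bias` are all assumptions for illustration; the planned design may aggregate differently.

```python
from statistics import median_low, pvariance

# Hypothetical ordinal scale for the three labels used in the illustrative output.
BIAS_SCALE = {"Liberal": -1, "Neutral": 0, "Conservative": 1}
SCALE_TO_LABEL = {score: label for label, score in BIAS_SCALE.items()}


def summarise_bias(labels_by_model: dict, high_variance_threshold: float = 0.5) -> dict:
    """Collapse per-model bias labels into a median label and a variance flag."""
    scores = [BIAS_SCALE[label] for label in labels_by_model.values()]
    # median_low always returns one of the observed scores, so it maps to a label.
    middle = median_low(scores)
    spread = pvariance(scores)
    return {
        "Median Bias": SCALE_TO_LABEL[middle],
        "Variance": "High" if spread >= high_variance_threshold else "Low",
    }


report = summarise_bias(
    {"model_A": "Liberal", "model_B": "Neutral", "model_C": "Conservative"}
)
print(report)
```

Reporting the spread alongside the median keeps disagreement visible in the output instead of averaging it away.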

Further Context (Optional Reading)

EchoBreaker is part of a broader research effort examining how information,

judgment, and decision-making change in an AI-mediated environment.

Patent Application No: GB2509923.5

Status: Patent Pending

Date of Filing: 20 June 2025

Innovation

PPT — Public Project Token

A sovereign financing framework for funding national infrastructure under binding

fiscal constraints. PPTs are tokenised, project-labelled government securities

designed to improve funding resilience, delivery discipline, and public legitimacy

while preserving full state ownership.

The model reframes public borrowing as transparent, voluntary participation in

specific national projects. Economic value arises not from off-balance-sheet

treatment, but from structure: broader investor participation, reduced refinancing

risk, and incentive-aligned execution.

Explore PPT

The Job Paradox

A conceptual analysis of how strong employment protection can, under labour surplus,

unintentionally weaken worker power by reducing job creation, employer competition,

and labour mobility. The paper distinguishes protecting jobs from

empowering workers.

Using South Korea as a central case, the framework reframes worker bargaining power

as a function of job abundance and credible outside options rather than immovable

job security, and links labour-market mobility to productivity through better

job–worker matching, youth employment, and long-run performance.

Explore the Framework

PBA — Parental Benefit Account

A demographic and fiscal policy framework addressing ultra-low fertility by

converting childbirth-related support into a long-term parental asset. Instead of

short-lived cash transfers, benefits are invested and returned later as retirement

income.

Built on an intergenerational return model, PBA aligns individual life-cycle

incentives with national sustainability, reducing short-term fiscal pressure while

strengthening retirement security and policy credibility among younger cohorts.

Explore PBA

Deterrence Without Guarantees

A short working note examining how nuclear restraint frameworks behave as

extended deterrence becomes less absolute in a multipolar world.

The paper analyses incentive shifts within the Nuclear Non-Proliferation

Treaty framework as security guarantees move from perceived certainty

toward probabilistic assurance. It is framed as a structural observation,

not a policy recommendation.

Read the Working Note

From Speed to Signal

A short working note on how competitive edge has shifted from faster access to scarce

information toward interpreting high-dimensional data in an information-saturated world.

The paper reframes AI as dimensionality-reduction infrastructure: an externalised cognitive

filter that compresses complex, interconnected signals into navigable representations—useful,

but imperfect, and never a substitute for human judgment.

Read the Working Note

Cloud Concentration Risk

A working note examining how cloud efficiency has quietly reversed a core principle

of system resilience: diversification.

As global infrastructure consolidates into a small number of hyperscale control planes,

failure modes shift from local and containable to correlated and systemic. The paper

analyses how this changes operational risk, attacker incentives, and institutional

learning — without arguing against cloud adoption itself.

Read the Working Note

Net Zero and Regressive Execution

A working note examining how well-intentioned decarbonisation policies can

become regressive when execution outpaces technical, economic, and social

absorption capacity.

The paper focuses on energy as a non-discretionary input and traces how rising

transition costs propagate through households, competitiveness, and employment.

It is framed as a systems and execution analysis — not an argument against

climate ambition itself.

Read the Working Note

Migration, Absorption, and the Ethics of Capacity

A working note examining why migration outcomes depend less on intent than on

absorption capacity — and how rate, support, and preparation shape integration

success.

The paper frames migration as a systems problem involving education, housing,

labour markets, and social infrastructure. It argues that admitting people

without sufficient capacity is not only inefficient, but ethically problematic,

even when intentions are humane.

Read the Working Note

After the Turing Test

A working note examining why behavioural imitation is no longer a reliable proxy

for inner experience in modern AI systems — and why new diagnostic frameworks

are needed as fluent imitation becomes cheap and ubiquitous.

The paper proposes the Deprivation–Resistance lens: distinguishing systems

whose behaviour collapses cleanly when expressive scaffolding is removed from

those that exhibit persistence, strain, or maladaptation under deprivation.

It reframes the question from performance to structure, and from realism to

resistance.

It also highlights a growing systemic risk: as AI systems increasingly act as

upstream filters of cognition—shaping what is surfaced, ranked, or discarded—

they quietly narrow the decision space before human judgment is applied.

Read the Working Note

PPT — Public Project Token

A New Way to Fund National Infrastructure — Without Higher Taxes or Privatisation

Problem: The UK Needs Major Investment But Has No Fiscal Space

The UK faces a growing investment gap across hospitals, rail, affordable housing, digital networks and energy systems.

Yet public budgets are stretched, borrowing costs remain elevated and tax increases are politically difficult.

At the same time, large-scale privatisation is neither desirable nor publicly acceptable.

In short: the UK urgently needs new infrastructure, but lacks a politically and fiscally viable way to fund it today.

The Solution: Public Project Token (PPT)

The Public Project Token (PPT) offers a practical, voluntary, and fully transparent mechanism for

governments to raise upfront capital for specific public projects. PPT enables individuals, pension funds, companies

and global investors to participate directly in national development —

without raising taxes and without privatising public assets.

PPTs reduce the government’s immediate borrowing requirement by providing voluntary upfront capital.

Although statistical authorities may classify PPTs as part of government debt, PPTs function as a

more transparent and more publicly accessible alternative to early gilt issuance —

broadening the investor base and accelerating critical investment.

What is PPT?

PPT is a digital participation instrument linked to a single, clearly defined government project

(such as a hospital redevelopment or a rail upgrade). Participants receive a predictable, bond-like

return, with optional incentives for efficient project delivery.

PPTs are issued within the existing borrowing remit of the UK Debt Management Office (DMO).

If retail demand is lower than expected, the government simply absorbs the remaining portion at par —

exactly as with standard gilt issuance. This guarantees issuance certainty with no additional fiscal risk.

Because each PPT is tied to a specific project, citizens can voluntarily support the causes they care about —

renewable energy, hospitals, transport or local regeneration. At the same time, the level of participation offers

government a transparent indication of public interest, while general-purpose PPT-G issuances support the wider

infrastructure programme without signalling preferences.

- Reduced immediate borrowing — voluntary capital lowers early gilt issuance

- No tax rises — participation is optional and imposes no cost on the wider public

- 100% public ownership — no privatisation or asset transfer

- Stable, predictable returns benchmarked to gilt yields

- Bonus rewards for projects delivered under budget

- Reduced returns if overspending occurs, improving accountability

- Tradable tokens on regulated digital-asset markets

- Full transparency through blockchain-based reporting

A Simple Analogy

Imagine a £100k home extension you’ve already budgeted for. Normally you would borrow the full £100k today.

With PPT, a relative voluntarily fronts £40k, so you only borrow £60k at the start. When the work is complete

and your home is worth more — just as productive infrastructure lifts GDP — you refinance and repay the £40k.

You still repay the same principal, but by reducing early borrowing at high interest rates, you

lower your total interest cost.
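The arithmetic behind the analogy can be sketched as follows. This is a minimal illustration, not a model of PPT pricing: only the £100k, £60k and £40k figures come from the analogy, while the bank rate, the return paid to the relative, the two-year build period, and the use of simple interest are all hypothetical assumptions.

```python
def build_phase_interest(bank_principal, relative_principal,
                         bank_rate, relative_rate, years):
    """Simple (non-compounding) interest paid during the build phase, in pounds."""
    yearly = bank_principal * bank_rate + relative_principal * relative_rate
    return yearly * years


# Hypothetical inputs: none of these three values appear in the analogy.
BANK_RATE = 0.06      # assumed annual bank borrowing rate
RELATIVE_RATE = 0.04  # assumed annual return paid to the relative
BUILD_YEARS = 2       # assumed time until the refinancing

# Option 1: borrow the full £100k from the bank on day one.
all_bank = build_phase_interest(100_000, 0, BANK_RATE, RELATIVE_RATE, BUILD_YEARS)

# Option 2: the relative fronts £40k, so only £60k is borrowed from the bank.
with_relative = build_phase_interest(60_000, 40_000, BANK_RATE, RELATIVE_RATE, BUILD_YEARS)

saving = all_bank - with_relative
print(f"Interest if all bank-funded:  £{all_bank:,.0f}")
print(f"Interest with £40k fronted:   £{with_relative:,.0f}")
print(f"Interest saved during build:  £{saving:,.0f}")
```

Under these assumptions the principal repaid is the same in both cases; the saving comes only from the gap between the two rates during the build period, and it disappears if the relative is paid the same rate as the bank.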

If the same relative later needs £10k back and no one else steps in, you simply borrow that £10k from the bank —

still within your original £100k budget. The total borrowing never changes; only who holds it does.

PPT works the same way: if retail holders seek liquidity and market demand is insufficient,

the DMO absorbs the balance at par, ensuring gilt-like liquidity and issuance stability.

PPT allows essential projects to begin sooner, supports economic growth and repays participants from the project’s

existing capital budget — all without raising taxes or reducing public control.

Why This Matters for the UK

After years of underinvestment and tight fiscal constraints, the UK needs new tools to fund major national projects.

PPT unlocks additional capital from citizens and institutions —

without privatisation, without austerity and without increasing today’s fiscal burden.

Download the full white paper:

Public Project Token (PDF)

The Job Paradox

Could employment protection weaken workers’ power under labour surplus?

The Conventional View

Employment protection is widely regarded as a cornerstone of worker welfare.

Strong dismissal rules, enhanced job security, and expanded employment rights

are commonly assumed to increase bargaining power, reduce exploitation, and

stabilise incomes during periods of economic stress.

Across advanced economies, these protections have delivered important social

benefits—particularly during downturns. Yet in many countries, stronger

protection has coincided with persistent labour-market challenges: limited

mobility, labour-market dualism, weak productivity growth, and declining

access to stable employment for new entrants.

The Paradox

The job paradox explored here is simple but counterintuitive:

under conditions of labour surplus, stronger employment protection can

unintentionally weaken workers’ power rather than strengthen it.

When hiring is perceived as risky and difficult to reverse, firms respond

rationally by limiting permanent recruitment, raising qualification thresholds,

and relying more heavily on temporary or non-standard labour. Job creation

slows, employer competition weakens, and labour mobility declines.

The labour market may appear protective on paper, yet function as a

buyer’s market in practice—one in which workers compete for a

limited number of jobs, even when legal protections are strong.

Worker Power Comes from Choice

Worker power does not arise solely from rights attached to a specific job.

It also depends on the structure of the labour market: the availability of

alternative jobs, the ease of movement between them, and the degree of

competition among employers.

Workers are strongest not when their current job is immovable, but when

leaving is credible. When jobs are abundant and employers compete for labour,

firms must offer better pay, conditions, flexibility, and progression in order

to attract and retain workers.

By contrast, when jobs are scarce, individual bargaining power weakens—

regardless of formal protections. Outside options disappear, and exit loses

credibility.

Productivity Through Better Matching

The implications of the job paradox extend beyond worker power to productivity.

Productivity is often framed in terms of technology, education, or effort.

Yet labour-market structure plays a critical and frequently under-examined

role in determining how effectively skills and effort are translated into output.

When workers can move between broadly similar roles across competing employers,

labour is reallocated toward better technical, social, and organisational fit.

Output rises not because people work longer hours or repeatedly retrain, but

because effort is applied in environments where it is more effective.

Where mobility is constrained, misallocation persists. Workers may apply real

effort and skill in poorly fitting roles, leading to disengagement and weak

productivity despite long hours or strong formal protections.

South Korea as a Case Study

South Korea provides a clear illustration of the job paradox.

Prior to the Asian Financial Crisis, Korea experienced strong labour demand.

Employment protection was relatively limited, and firms competed actively for

workers. Labour-market entry was less constrained than in later

periods, with worker power emerging primarily from demand rather than regulation.

Following the crisis, employment protection for permanent workers was

strengthened. While necessary at the time, these reforms altered firm

behaviour over the long term. Dismissal became legally complex and

perceived as difficult to reverse.

Firms responded by hiring more cautiously, raising screening standards,

and shifting toward temporary and non-regular contracts. Job creation

slowed, labour-market dualism increased, and entry into stable employment

became progressively more difficult—particularly for younger workers.

Protection as a Blunt Instrument

Labour protection rules tend to standardise employment relationships,

compressing a wide range of worker preferences and firm circumstances

into a narrow regulatory framework.

In heterogeneous labour markets, such standardisation limits mutually

beneficial matches. Faced with rigid obligations, firms rationally avoid

marginal hires, remain small, or rely on informal or temporary labour.

The unintended consequence is not simply fewer jobs, but fewer employers

competing for labour—weakening worker power and constraining productivity

even where protections are strong on paper.

Implications

The job paradox does not argue against employment protection.

It argues that protection alone is insufficient to empower workers

under labour surplus.

Meaningful worker power depends on job abundance—

sustained employer entry, high mobility, and credible alternatives.

The same conditions also support higher productivity by allowing

workers to move toward better-fitting roles.

Policies that protect workers within jobs must therefore be complemented by

policies that expand the number of jobs and employers competing for labour.

Protecting workers through choice, rather than immobility, is the

central insight of this framework.

Download the full concept paper:

The Job Paradox (PDF)

PBA — Parental Benefit Account

A demographic and fiscal policy framework for ultra-low fertility societies

The Problem: Childbirth as an Economic Risk

In many advanced economies — particularly South Korea — ultra-low fertility

is not driven primarily by cultural preference, but by economic structure.

For younger generations, childbirth is widely perceived as a decision that

weakens long-term financial security rather than strengthens it.

Existing policy responses focus heavily on short-term cash transfers and

subsidies. While politically visible, these measures tend to be consumed

quickly and fail to address the core concern: the long-term impact of

childbirth on career continuity, lifetime income, and retirement security.

As a result, fertility decisions increasingly reflect a rational response to

economic incentives rather than personal or social preference.

The Core Idea

The Parental Benefit Account (PBA) reframes childbirth-related

support from short-term consumption into long-term asset building.

Instead of distributing most benefits as immediate cash, public support is

invested into a dedicated parental account and paid out later as retirement

income.

This approach transforms childbirth from a perceived economic liability

into a contributor to long-term financial stability — directly addressing

the life-cycle risk that deters many potential parents.

An Intergenerational Return Model

PBA is built on an intergenerational return framework.

Children’s future economic participation expands the tax base and supports

national productivity. A portion of this future value is explicitly linked

back to parental retirement security through the PBA mechanism.

This structure aligns individual incentives with national sustainability:

parents are rewarded not for short-term demographic targets, but for

contributing to the future productive capacity of the economy.

Why This Is Not a Cash Subsidy

Unlike traditional child benefits, PBA is not designed to boost short-term

disposable income. Its objective is to reduce long-run economic anxiety by

strengthening retirement outcomes for parents.

From a fiscal perspective, this shifts demographic policy from recurring

consumption spending toward long-term, investment-like expenditure,

easing short-term budget pressure while improving sustainability.

Why the Primary Document Is in Korean

The detailed PBA concept paper is written in Korean because it is designed

primarily for South Korea — a country facing the most acute combination of

ultra-low fertility, rapid ageing, and pension-system strain among advanced

economies.

The paper engages directly with Korean institutional structures, pension

systems, demographic data, and public policy debates. As such, Korean is the

appropriate language for precise policy discussion and stakeholder

engagement.

The core principles, however — incentive alignment, life-cycle security,

and intergenerational sustainability — are broadly applicable to other

ageing societies.

Download the full concept paper (Korean):

Parental Benefit Account (KR, PDF)

Deterrence Without Guarantees

Nuclear Restraint Under Multipolar Stress

Problem: Security Frameworks Built on Certainty Are Facing Drift

The modern non-proliferation regime was constructed under conditions of

concentrated power and highly credible security guarantees. Non-nuclear states

accepted long-term restraint in exchange for stability, alliance protection,

and the expectation of predictable escalation control.

As global power distribution becomes more multipolar, those underlying

assumptions are increasingly strained. Even without formal policy changes,

uncertainty around extended deterrence alters incentives well before any

visible failure occurs.

Focus: Incentive Shifts, Not Policy Prescription

This working note does not argue for treaty withdrawal, proliferation, or

changes in national defence policy. Instead, it examines how incentive

structures behave when the perceived certainty of security guarantees

declines.

The analysis treats deterrence as a probabilistic system rather than a binary

condition, highlighting how credibility, asymmetric risk exposure, and

enforcement capacity interact under multipolar stress.

Why Nuclear Restraint Becomes a Focal Stress Point

Nuclear weapons occupy a unique position within security systems. Unlike

conventional capabilities, which scale incrementally and require sustained

investment, nuclear capability produces a discontinuous shift in deterrence

posture once acquired.

This asymmetric payoff — large strategic impact relative to time and resource

investment — explains why nuclear restraint frameworks are particularly

sensitive to changes in perceived certainty, even if no immediate policy

changes follow.

The Limits of Economic Substitutes

In the absence of sovereign nuclear deterrence, many states have relied on

economic integration, interdependence, and sanctions-based enforcement as

partial substitutes. These tools raise the cost of conflict and can shape

behaviour, but their effectiveness depends on coalition cohesion and network

dominance.

As global economic power becomes more distributed, economic deterrence

increasingly complements — rather than replaces — existential security

guarantees.

Why This Note Exists

The purpose of this paper is not to forecast outcomes or assess optimal policy,

but to frame a structural tension within the existing non-proliferation

regime. When incentives shift faster than institutions adapt, unmanaged drift

itself becomes a source of risk.

Download the working note:

Deterrence Without Guarantees (PDF)

From Speed to Signal

How Edge Migrated from Information Access to Dimensionality Reduction

Problem: Information Abundance Has Outpaced Human Cognition

For much of modern financial and strategic history, competitive advantage

was derived from faster access to scarce information. Physical and temporal

constraints created meaningful asymmetries between those who could observe

events early and those who could not.

That constraint has largely disappeared. News, data, and commentary now move

at effectively zero marginal cost. Yet decision quality has not improved

proportionally. In many domains, confusion, volatility, and narrative

instability have increased.

Focus: Dimensionality, Not Volume

The dominant challenge today is not the amount of information available,

but its dimensionality: the number of interacting variables, perspectives,

time horizons, and feedback loops required to describe a situation coherently.

Human cognition evolved to operate in relatively low-dimensional

environments. When faced with high-dimensional systems, the primary failure

mode is not ignorance but premature narrative collapse — simplifying too

early in order to act.

AI as Cognitive Infrastructure

This working note frames modern AI not as an autonomous decision-maker, but

as dimensionality-reduction infrastructure: an externalised cognitive filter

that compresses complex, interconnected data into navigable representations.

Like human perception, this compression is necessarily lossy and imperfect.

Its value lies not in producing truth, but in reshaping the surface on which

human judgment operates under time and cognitive constraints.

Why This Matters for Competitive Edge

As access and speed become commoditised, advantage migrates away from raw

information acquisition and toward interpretation, framing, and disciplined

judgment. AI does not remove uncertainty — it reorganises complexity so that

understanding becomes possible.

The paper treats AI as infrastructure rather than intelligence, and edge as

a function of how compression tools are used rather than the tools

themselves.

Why This Note Exists

The purpose of this paper is not to forecast technological outcomes or argue

for specific AI deployments, but to frame a structural shift in how advantage

is generated in information-saturated environments.

Download the working note:

From Speed to Signal (PDF)

Cloud Concentration Risk

When Efficiency Quietly Becomes Fragility

Problem: Resilience Has Been Replaced by Correlated Dependence

Over the last decade, cloud computing has delivered extraordinary gains in

efficiency, scalability, and reliability. Infrastructure that once required

heavy fixed investment is now elastic, global, and on-demand.

But this shift has quietly reversed a principle that once underpinned system

resilience: diversification. What were once many independent systems have

become many organisations dependent on the same small set of shared

infrastructure and control planes.

Focus: Failure Domains, Not Uptime Statistics

Modern cloud architectures are highly robust to small, localised failures.

The risk emerges elsewhere — in correlated failure modes that sit above

individual services, availability zones, or redundancy mechanisms.

The most dangerous concentration is not compute or storage, but control:

identity, orchestration, routing, and access layers whose failure propagates

across thousands of organisations simultaneously.
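The difference between independent and correlated failure can be made concrete with a small probability sketch. It rests on deliberately simplified assumptions that are not taken from the paper: every system fails with the same hypothetical probability in a given period, independent systems fail independently, and a shared control-plane failure takes down every dependent organisation at once.

```python
def independent_all_fail(p: float, n: int) -> float:
    """Probability that n independently run systems all fail in the same period."""
    return p ** n


def shared_all_fail(p: float) -> float:
    """Probability that every dependant fails when one shared control plane fails."""
    return p


# Hypothetical values chosen only for illustration.
N_ORGS = 10    # number of organisations
P_FAIL = 0.01  # assumed failure probability per period

# The expected number of affected organisations is the same in both designs.
expected_failures = N_ORGS * P_FAIL

print(f"Expected organisations down per period: {expected_failures:.2f}")
print(f"P(all down), independent systems:  {independent_all_fail(P_FAIL, N_ORGS):.3e}")
print(f"P(all down), shared control plane: {shared_all_fail(P_FAIL):.3e}")
```

The averages match, which is why uptime statistics can look identical, while the probability of everyone failing at once differs by many orders of magnitude. That tail, rather than the mean, is the failure domain discussed above.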

Scale Changes the Threat Model

As infrastructure consolidates, the payoff to disruption, compromise, or

persistent access increases non-linearly. Concentration does not just amplify

accidents — it reshapes attacker incentives, attracting more patient,

better-resourced, and increasingly strategic adversaries.

What appears operationally efficient begins to resemble strategic terrain,

where failure or compromise produces hostage-style dynamics rather than

isolated outages.

The Illusion of “Someone Else’s Problem”

Cloud adoption often transfers operational responsibility while leaving

dependency intact. Outages arrive externally, accountability diffuses, and

shared failure suppresses learning rather than triggering architectural

re-evaluation.

Systems that fail rarely but catastrophically can generate less institutional

learning than systems that fail frequently but locally. Fragility becomes

normalised rather than corrected.

Why This Note Exists

This paper is not an argument against cloud computing. It is an examination of

how efficiency, when mistaken for resilience, introduces under-priced systemic

risk through concentration and correlated failure.

The goal is to make this risk visible — as a structural trade-off rather than

an operational surprise — and to frame resilience as a deliberate design

choice rather than an accidental outcome.

Download the working note:

Cloud Concentration Risk (PDF)

Net Zero and Regressive Execution

When Climate Ambition Outruns Absorptive Capacity

This paper does not argue against decarbonisation, but for transitions that can be absorbed without creating regressive outcomes.

Problem: Energy Is a Non-Discretionary Input

The objective of reducing carbon emissions is widely accepted. The central

challenge is no longer whether to decarbonise, but how and at what pace.

Energy differs from many policy domains in one critical respect: households

and firms cannot opt out.

Heating, transport, lighting, and basic mobility are not discretionary.

For industry, energy is a core production input. When transition costs rise

faster than viable substitutes and infrastructure can absorb them, higher

prices do not merely change incentives — they redistribute constraint.

Focus: Execution Timing, Not End-State Targets

Net zero strategies are often articulated as end-state goals: target dates,

mandated technologies, or prohibited assets. While directionally clear,

these approaches compress a wide range of technical, social, and

infrastructural constraints into a single timeline.

The execution risk emerges in the gap between ambition and feasibility.

When price signals or mandates are introduced before substitutes are

mature, affordable, and widely deployable, behaviour remains inelastic.

Costs rise immediately; adaptation lags.

Regressivity Through Constraint, Not Intent

Higher energy costs propagate quickly through the economy. They raise

household bills directly, flow into food and transport prices, and feed

through rents, goods, and services.

For higher-income households, these costs are often absorbed through capital

substitution — insulation, new vehicles, alternative heating, or storage.

For lower-income households, adjustment occurs through constraint:

reduced mobility, colder homes, and narrower employment options.

Capital Substitution vs Behavioural Compression

Many transition frameworks implicitly assume that higher prices will

accelerate innovation and adoption. This assumption holds only when viable

alternatives already exist or are close to maturity.

Where substitutes are unavailable or prohibitively expensive, penalisation

does not change behaviour. It functions instead as a transfer, concentrating

burden on those with the least capacity to adapt. The transition divides

society not by belief, but by access to capital.

Second-Order Effects: Competitiveness and Employment

Energy costs do not stop at households. They feed directly into production

costs, pricing, and competitiveness. Firms facing sustained input pressure

respond through reduced investment, delayed hiring, or automation.

These effects loop back into employment and mobility. Rising transport and

energy costs restrict access to work, particularly for those unable to

relocate or work remotely. The burden compounds through the labour market

as a matter of arithmetic, not ideology.

Why This Note Exists

This paper is not an argument against climate ambition. It is an examination

of how execution that outpaces technical readiness and social absorption can

become regressive by construction, even when long-term objectives are

broadly supported.

The goal is to make these constraints visible — to frame decarbonisation as a

systems and execution challenge, not merely a pricing or signalling exercise

— and to highlight that transitions which cannot be absorbed will not

endure.

Download the working note:

Net Zero and Regressive Execution (PDF)

Migration, Absorption, and the Ethics of Capacity

Why Successful Integration Depends on Rate, Support, and Host Responsibility

This paper does not argue for more or less migration, but for migration that is engineered to succeed.

Problem: Migration Is a Systems Process, Not a Binary Choice

Migration is often debated as a binary question — open or closed, for or against.

This framing obscures the more consequential issue: how much, at what pace, and

with what level of preparation.

Migration is not a single event. It is a multi-stage systems process involving

housing, education, healthcare, language acquisition, labour-market access, and

social integration. Each of these systems has finite capacity and limited speed

of adjustment.

Focus: Absorption Capacity, Not Intent

Outcomes are determined less by policy intent than by absorption capacity. When

inflows align with institutional and community capacity, integration can be

constructive and mutually beneficial.

When inflows exceed that capacity, pressure accumulates before policy intent can

translate into outcomes. Integration falters, resentment rises, and political

backlash becomes increasingly likely — not as a failure of values, but as a

failure of execution.

Absorption Is Dynamic but Finite

Absorption capacity is neither fixed nor unlimited. It exists across multiple

layers: housing availability, classroom size, language support, administrative

bandwidth, labour-market matching, and informal social networks.

These systems adapt over time — but not instantly. When migration accelerates

faster than adaptation, stress appears early and compounds quickly.

Heterogeneous Support Needs and Throughput Limits

Migration is often discussed in terms of headline numbers, but volume alone does

not determine system load. Different migrants require different levels and

durations of support to integrate successfully.

From a systems perspective, what matters is capacity consumption over time.

Higher-intensity support pathways draw more heavily on finite institutional

resources. As average support intensity rises, throughput falls — even if

inflow numbers remain unchanged.
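One way to read this relationship is as capacity divided by average support intensity. The sketch below is a rough illustration under stated assumptions: the notion of a "support unit", the capacity figure, the two support pathways, and the mixes are all hypothetical and do not come from the paper.

```python
from typing import Sequence, Tuple


def average_intensity(mix: Sequence[Tuple[float, float]]) -> float:
    """Weighted average support units per person for (share, units) pairs."""
    total_share = sum(share for share, _ in mix)
    if abs(total_share - 1.0) > 1e-9:
        raise ValueError("shares must sum to 1")
    return sum(share * units for share, units in mix)


def sustainable_throughput(capacity_units: float,
                           mix: Sequence[Tuple[float, float]]) -> float:
    """People per year the system can support at a given intensity mix."""
    return capacity_units / average_intensity(mix)


# Hypothetical figures for illustration only.
CAPACITY = 9_000.0     # support units available per year
LOW, HIGH = 1.0, 5.0   # units consumed per person on each pathway

mix_a = [(0.8, LOW), (0.2, HIGH)]  # mostly low-intensity support
mix_b = [(0.5, LOW), (0.5, HIGH)]  # higher average intensity

for name, mix in (("A", mix_a), ("B", mix_b)):
    print(f"Mix {name}: average intensity {average_intensity(mix):.1f}, "
          f"throughput {sustainable_throughput(CAPACITY, mix):,.0f} people/year")
```

With capacity held fixed, the mix with higher average intensity supports fewer people per year, so the same headline inflow can sit within capacity under one mix and exceed it under another.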

Host Responsibility and Ethical Capacity

Migration policy implicitly makes a promise: that those admitted will be given a

fair opportunity to integrate and succeed. Migrants cannot reasonably assess a

host country’s capacity to deliver that promise.

Responsibility for sequencing therefore rests with the host system. Admitting

people without sufficient preparation is not a neutral act. When failure is

foreseeable, responsibility cannot be outsourced to individual effort or

circumstance.

Moral Responsibility Beyond Admission

Moral responsibility does not end at entry. It extends to the conditions under

which people are expected to build their lives.

Children entering education systems without adequate language support or

classroom capacity face long-term consequences that are difficult to reverse.

Adults encountering congested pathways to work and credential recognition face

stalled integration and exclusion. These outcomes are predictable when systems

are overstretched.

This exposes a common failure mode in public debate. Moral conviction is often

the starting point for action — but it can also become a stopping point. Moral

certainty can sometimes short-circuit intellectual effort, encouraging us to

treat intent as sufficient while leaving execution under-examined.

If we genuinely care about outcomes, we are obliged to think harder — not stop

earlier.

Doing everything possible includes ensuring a genuine chance of success,

not just access.

Acceptance is only the beginning of responsibility. The real work of

helping begins after admission.

Education as a Binding Constraint

Education is often where absorption limits surface first. Classrooms have finite

attention, and language support requires trained staff. When support needs rise

sharply, trade-offs become unavoidable.

Students who cannot integrate effectively into education systems face reduced

opportunity long before adulthood. This dynamic harms integration itself — not

just individual cohorts.

From Integration Gaps to Social Risk

When integration fails early, frustration accumulates over time. Limited

educational progress, restricted labour-market access, and stalled mobility

create conditions where resentment can emerge — not as a cultural response, but

as a reaction to constrained opportunity.

Informal labour markets, parallel support structures, and social separation

often follow. Once established, these patterns are difficult to unwind and

become focal points of political tension.

Why “Just Invest More” Is Not Sufficient

Investment matters, but it faces limits. Infrastructure takes time to build,

skilled professionals take time to train, and social integration cannot be

accelerated arbitrarily.

Treating capacity as infinitely scalable underestimates the lag between policy

decision and system response.

Why This Note Exists

This paper is not an argument against migration. It is an examination of how

migration outcomes depend on execution quality — on aligning inflow rates with

demonstrable capacity and scaling support ahead of demand.

The goal is to frame migration as a systems and execution challenge rather than a

moral binary, and to highlight that integration success is engineered, not

assumed.

Download the working note:

Migration, Absorption, and the Ethics of Capacity (PDF)

After the Turing Test

Why Persistence Under Deprivation Matters More Than Performance Under Observation

This paper does not attempt to resolve the philosophical question of consciousness.

It examines why behavioural imitation is no longer a reliable diagnostic in an era

where imitation has become cheap, fluent, and scalable.

Problem: The Turing Test Measures Performance, Not Structure

The Turing Test was never designed to detect consciousness. It was a pragmatic

substitute for an intractable question, asking whether a machine could imitate

human conversational behaviour well enough to be indistinguishable from a person.

For much of the twentieth century, this was a demanding benchmark. Linguistic

fluency, contextual awareness, and social realism were scarce capabilities.

Today, they are not.

Modern AI systems routinely produce language that is emotionally expressive,

socially appropriate, and contextually rich. As a result, passing the Turing Test

increasingly reflects training data richness and output smoothness rather than

any underlying experiential structure.

Focus: From Imitation to Dependency

The central limitation of behavioural tests is that they evaluate outputs under

ordinary conditions. They ask whether behaviour looks human, not what sustains it.

In an environment saturated with human-generated text, imitation no longer requires

understanding. The more expressive the training data, the more convincing the

surface realism becomes.

This paper reframes the diagnostic question: not whether a system can imitate human

behaviour, but whether that behaviour depends entirely on continued access to

expressive scaffolding.

Deprivation as a Diagnostic Lens

The proposed alternative replaces performance under observation with behaviour

under deprivation.

The idea is simple: remove key expressive inputs — emotional language, narrative

framing, introspective cues — and observe how behaviour changes. The interest is

not whether behaviour degrades, but how it degrades.

Systems whose behaviour is exhaustively determined by training input should

exhibit clean omission: expressive layers disappear, while instrumental competence

remains intact. Nothing pushes back.

The Core Asymmetry

Humans and machines respond to deprivation differently.

In humans, deprivation of emotional or social scaffolding does not produce

neutrality. It produces strain: distress, maladaptation, anxiety, and long-term

harm. Experience persists even when expression is constrained.

In contemporary AI systems, deprivation produces omission rather than resistance.

Expressive behaviour fades in proportion to input removal, without internal

pressure, compensatory action, or instability.

This distinction is structural, not stylistic. It is not a matter of degree, but of

category.

The Deprivation–Resistance Lens

This paper proposes the Deprivation–Resistance lens as a successor

to behavioural realism.

Under this lens, the diagnostic signal is not realism, fluency, or emotional tone,

but persistence under constraint. Resistance, strain, or maladaptation under

deprivation indicates behaviour that is not exhaustively explained by input.

Clean omission indicates representational behaviour: impressive and useful, but

structurally dependent.

Why Behaviour Alone Is No Longer Informative

As imitation improves, humans become increasingly prone to over-attribution.

Emotional language, moral framing, and apparent self-reference are easily mistaken

for inner experience.

This creates asymmetric risk: machines are granted moral weight that behaviour

alone does not justify, while humans whose expression is impaired or atypical

risk being under-recognised.

Behavioural realism is therefore not just insufficient — it can be misleading.

Why This Matters

Mistaking imitation for experience has practical consequences. It shapes how

responsibility is delegated, how authority is assigned, and how ethical judgment

is externalised.

As AI systems are integrated into governance, decision support, and social

interaction, the cost of false attribution rises.

A diagnostic framework that probes dependency rather than performance helps

reduce these errors — not by denying future machine consciousness in principle,

but by resisting premature certainty in the present.

Why This Note Exists

This paper is not a claim that machines can never be conscious. It is a claim that

behavioural fluency alone is no longer evidence of inner experience.

When imitation is cheap, resistance is informative. Understanding that distinction

is essential for responsible deployment, governance, and human self-understanding

in an era of increasingly convincing mirrors.

A further risk lies not in AI systems making decisions autonomously,

but in their growing role as upstream filters of cognition.

As AI increasingly mediates what is surfaced, summarised, ranked,

or discarded, it quietly narrows the decision space before

human judgment is applied.

Download the working note:

After the Turing Test (PDF)

Ethos

Hands-On Strategic Leadership

I combine strategic direction with a hands-on approach, staying close to technology,

markets, and operations. My focus is on removing blockers, aligning execution with

business objectives, and creating the conditions for teams to deliver reliably under pressure.

Agility with Scale

I adapt team structures to fit the problem at hand — deploying small, focused groups

when speed matters, and building broader frameworks when resilience and scale are required.

Resources are allocated deliberately, expertise applied where it compounds most,

and teams benefit from cross-functional learning rather than siloed execution.

In practice, I’m often asked to operate across traditional boundaries — stepping between

front-office execution, risk, technology, and operations as problems evolve.

I’m comfortable switching context quickly, resolving ambiguity, and stabilising systems

that sit between teams rather than neatly within one function.

This has shaped an approach that values adaptability without sacrificing accountability,

particularly during periods of transition or organisational change.

Innovation with Purpose

Technology should evolve in step with the business.

I favour solutions that balance innovation with stability,

reduce unnecessary complexity, and remain operable over time.

Effectiveness matters more than familiarity, and progress is measured

by outcomes rather than novelty.

Performance Through Systems Thinking

I take a layered view of performance, looking beyond application logic to how the network,

operating system, and software interact as a whole.

High-performing systems are rarely optimised in one place;

they emerge from understanding and tuning the full stack.

This perspective is reinforced through hands-on experimentation.

In practice, meaningful gains often come from improving foundations

rather than adding complexity at the surface.

See example.

As experience accumulates, perspective naturally broadens, with more attention given

to structure and system-level trade-offs. Sustained hands-on work remains essential,

preserving the ability to zoom back in and understand how decisions play out

at the point of execution.

This shared perspective improves communication across teams,

reducing the time spent explaining context when decisions need to be made,

and allowing ideas to be challenged meaningfully rather than superficially.

Leadership Values

My leadership approach is shaped by flexibility, accountability, and curiosity.

I focus on bridging strategy and delivery — creating environments where teams

can move quickly, learn continuously, and operate with clear ownership

in complex, fast-changing settings.

About Me

I’ve spent my career working at the intersection of markets, technology, and decision-making under uncertainty.

Much of that time has been inside institutional settings — hedge funds and global investment banks —

designing execution systems, managing risk, and building trading infrastructure that operates under real capital,

regulatory scrutiny, and market stress.

Over time, this work expanded beyond pure execution into broader operating responsibility —

coordinating across trading, technology, risk, compliance, and operations,

and translating strategy into systems that teams could actually run day after day.

In those roles, success was measured less by ideas than by whether processes held up

under scale, scrutiny, and stress.

Trade execution has been my core discipline.

My focus has been on how trades interact with markets at scale — how information leaks,

how impact accumulates, and how structure and incentives shape outcomes

more reliably than prediction alone.

This has meant working across electronic trading, execution analytics,

market structure, and the systems that sit between portfolio managers,

liquidity providers, and venues.

At heart, I think in systems.

Whether I’m optimising execution slippage, designing tick-level analytics,

or exploring policy mechanisms outside finance,

I approach problems the same way:

understand the incentives, map the constraints,

and change the structure so better outcomes emerge naturally.

This lens becomes more important — not less — as organisations scale,

and informal coordination gives way to formal process.

I try to approach problems from multiple angles — combining market microstructure,

data analysis, and ideas borrowed from other fields where useful.

For example, entropy-based views of market impact help explain how repeated trading behaviour

creates detectable structure.

While short-term price movements are close to random,

execution patterns are not —

and those patterns can be read, anticipated, and exploited,

increasing the true cost of trading.
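
As a rough illustration of that contrast, here is a minimal Python sketch. It is not taken from any production analytics: the data is synthetic, the function names are hypothetical, and a fixed four-slice order schedule stands in for "repeated trading behaviour". It compares how many bits of uncertainty remain about the next observation once the previous one is known.

```python
import math
import random
from collections import Counter


def shannon_entropy(symbols):
    """Shannon entropy (bits) of the empirical distribution of `symbols`."""
    counts = Counter(symbols)
    total = len(symbols)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def conditional_entropy(symbols):
    """Empirical H(next | previous) in bits, computed as H(pairs) - H(previous)."""
    pairs = list(zip(symbols, symbols[1:]))
    return shannon_entropy(pairs) - shannon_entropy(symbols[:-1])


# Synthetic stand-in for short-term price moves: independent up/down ticks.
rng = random.Random(0)
price_signs = [rng.choice([-1, 1]) for _ in range(10_000)]

# Synthetic stand-in for a repeated execution schedule: the same four
# child-order sizes sent in the same sequence, over and over (assumption).
child_order_sizes = [100, 200, 300, 400] * 2_500

# The size distribution on its own looks varied (four equally likely sizes)...
size_marginal_bits = shannon_entropy(child_order_sizes)

# ...but knowing the previous slice removes all uncertainty about the next,
# whereas knowing the previous tick says almost nothing about the next tick.
pattern_bits = conditional_entropy(child_order_sizes)
random_bits = conditional_entropy(price_signs)

print(f"order sizes, marginal entropy:      {size_marginal_bits:.3f} bits")
print(f"order sizes, next given previous:   {pattern_bits:.3f} bits")
print(f"price ticks, next given previous:   {random_bits:.3f} bits")
```

In this toy setup the schedule's conditional entropy is zero while the price ticks stay close to one bit, which is the sense in which an execution pattern can be "read" even when prices cannot. Real order flow is noisier, so the gap would be smaller and would need far more careful estimation than this plug-in estimate.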

Philosophy: From Critique to Construction

I value progress through building and testing ideas.

When I engage with a problem, it’s not to add another layer of critique,

but to move it closer to a workable solution.

My instinct is to strip issues back to first principles and ask:

what lever actually changes the outcome?

Most problems don’t require more commentary —

they require clearer structure, aligned incentives,

and practical iteration.

Much of my professional work is necessarily constrained by institutional

intellectual property.

I use this site to explore adjacent or less-established problem spaces —

areas where ideas can be tested openly, without those constraints.

These prototypes are shared deliberately to invite challenge

and to see how others would approach the same questions.

Stepping outside one’s primary domain, I’ve found,

is often the best way to return to core work with a sharper and less biased perspective.

Randomness is often just a path waiting to be discovered.

The Baemax Sandbox

Baemax is my personal innovation lab —

a place to explore ideas at the intersection of

finance, engineering, and public policy,

and to test whether they hold up when pushed beyond theory.

Baemax is not separate from my operating work —

it is how I pressure-test ideas before they appear

inside real organisations, real budgets, and real teams.

While the individual projects evolve, the underlying lens does not.

Across domains, my work follows the same pattern:

identify a systemic failure, understand the incentives and constraints,

and design a structure that improves outcomes.

The aim is not domain expertise for its own sake,

but to see whether the same system-level principles —

incentives, scale effects, redundancy, and failure modes —

reappear when applied to very different problems.

Work published here broadly falls into three recurring categories:

-

Incentives & Policy Design —

exploring how fiscal, demographic, labour-market, and integration outcomes change

when incentives and capacity constraints are engineered rather than

treated as purely ideological or volume-driven problems

(e.g. Public Project Token,

Parental Benefit Account,

The Job Paradox,

migration absorption and integration dynamics).

-

Risk, Resilience & Concentration —

examining how systems fail when scale, correlation, or control-plane

concentration erodes assumed redundancy

(e.g. Inlet Shield,

deterrence dynamics, cloud concentration risk).

-

Information, Signal & Decision Systems —

understanding how competitive edge shifts when information is abundant,

cognition is constrained, and advantage depends on compression,

framing, and disciplined judgment